The most imminent bottleneck in the current coordinator implementation seems to be the TSA and in particular the milestone selection. In this topic we will discuss potential issues and improvements.

Status quo

- In Compass, the coordinator uses the same TSA as any IRI node to select the MS.

- One MS is issued about every minute.

- If the TSA does not return a tip within the given time limit (60s), the new MS will directly approve the last MS. (This effectively renders the network useless in high TPS scenarios.)

Objectives

Any good MS selection should meet the following criteria:

- Maximize CTPS (confirmed transactions per MS), even during attacks

- Computationally efficient, even in high TPS scenarios

Attack vectors

Double spends are no issue as the Coordinator guarantees security and finality. An attacker can however try to reduce the CTPS of the network or try to increase the chance that the coordinator selects his transactions:

- Parasite Chain

Creating a structure with many transactions but no references of honest transactions, in such a way that the probability that a tip in that structure is selected is maximized. This attack reduces the number of confirmed honest transactions.

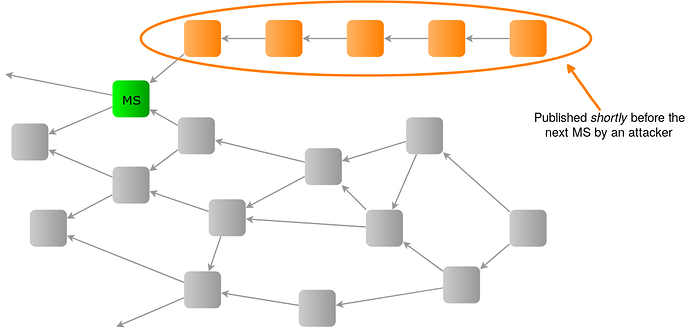

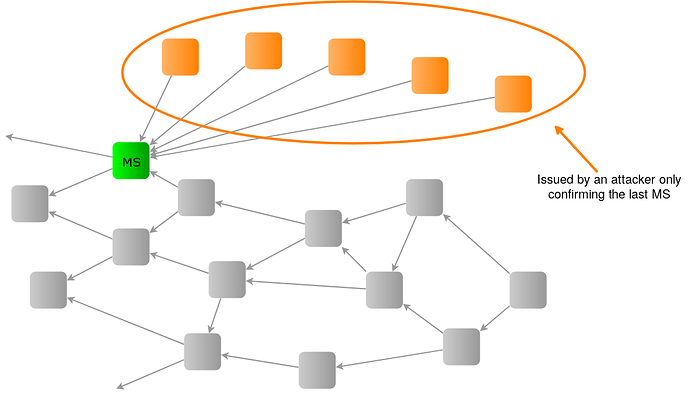

- Blowball

Spamming lazy transactions not confirming any honest transactions, i.e. only confirming the entry point of the MS selection. This attack reduces the overall CTPS of the system.

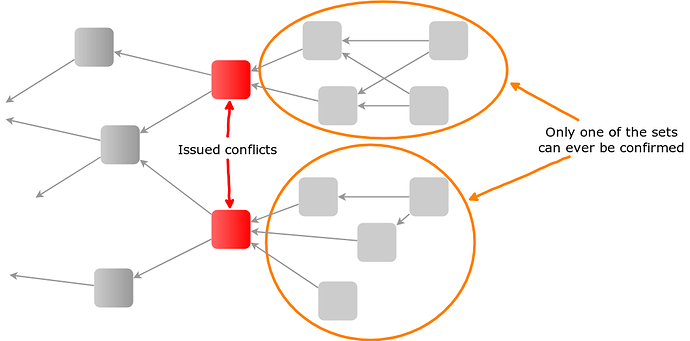

- Splitting

Issuing conflicts/re-attachments shortly after the last MS to split the network (see Conflict Spamming Attack). This is a dangerous attack, since many honest transactions can be invalidated with just two (or very few) conflicts.

- Bootstrapping

When snapshots are used, an attacker could distribute a faked snapshot file with invalid balances. Especially in the case where conflicts stay in the Tangle, this would be very hard to detect for the affected user.

Solutions

Key observation: The milestone selection has different objectives and restrictions than the regular TSA. Thus, it makes a lot of sense to use an optimized and specialized algorithm for it.

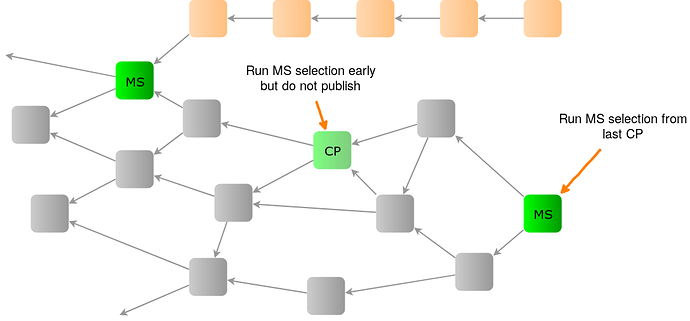

- Parasite Chain

Run the MS selection more often (for example every 10s). The resulting tip is not published, but kept hidden as a checkpoint, that is only used as a starting point of the next MS selection.

Any parasite chain that was not present during the creation of a checkpoint will never be selected. Parasite structures published already earlier can be referenced by honest nodes and do not pose an attack vector. Performing the CP selection in shorter intervals also has computational advantages as the set of transactions on which the selection is run will be much smaller.

In order to avoid getting stuck in “local minima”, multiple CP alternatives can be computed in each step. The final MS can then be selected from all CP alternatives of the last step.

- Blowball

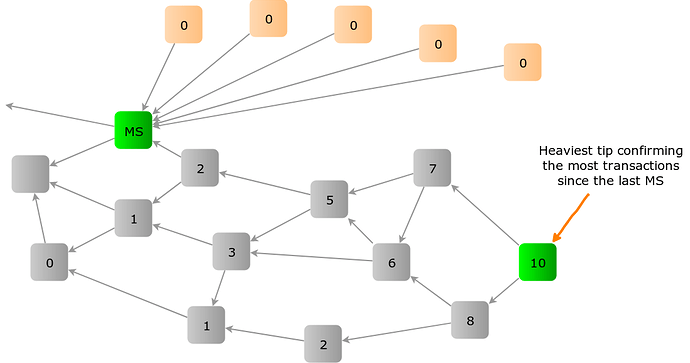

The MS selection strategy must try to maximize the CTPS even in case of blowballs. The optimum here is to select the heaviest branch, i.e. the tip (or pair of tips) that reference the most transactions since the last MS.

Finding the heaviest tip is similar to computing the cumulative weight, but it propagates forward instead of backward which makes it algorithmically a bit nicer: For each incoming transaction, the set of referenced transactions is computed by forming the union of the sets of its directly referenced transactions.

For heuristic algorithm to find the heaviest pair, see also Heavist Branch Selection.

- Splitting

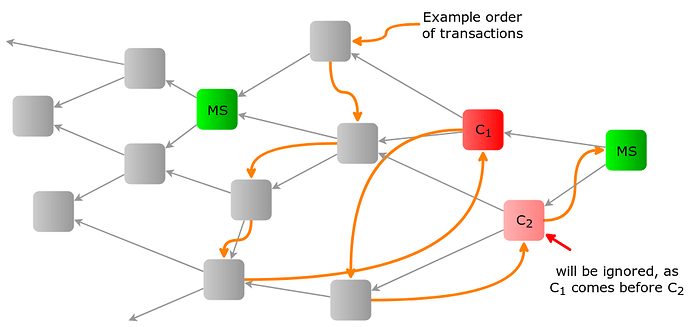

Keep conflicts in the Tangle: Use milestones to order previous transactions and ignore everything but the first conflict. The MSA is still relevant to prevent other attack vectors, but it can be greatly simplified as validity checks are no longer necessary. However, it represents a backward compatible breaking change in the way the balances are computed. See Conflict White Flag.

- Bootstrapping

Since the Coordinator is resolving conflicts (directly or indirectly) anyways, it is also possible that the Coordinator also shares its “view” on the current balances. An easy way to do so would be to include the hash of the current ledger in every MS.

Proposal

In the following we will describe a very simple proposal that combines all of the ideas above:

- Change the balance computation as described in Conflict White Flag. Order the transactions within a MS by postorder depth first traversal (trunk, branch, root).

- For each solidified transaction, the Coordinator keeps track of the set of referenced transactions since the last MS/CP.

- After 10s or after a threshold of incoming transactions (this number needs to be determined in experiments using the actual selection algorithm in order to prevent overloads in high TPS scenarios), the Coordinator selects the transaction referencing the last MS/CP with the highest coweight, i.e. cardinality of the set of referenced transactions, as the next CP. If there is more than one such transaction, select the one that was seen first.

- When a CP was selected and more than 60s have passed since the last MS a new MS is published that references the last MS and the last selected CP.

This marks an initial version of such a proposal. There are several aspects where the protocol could be optimized (for resilience and computational performance). However, it seems likely that the overall aspect of the improvements will be rather small:

- Use a different order for Conflict White Flag.

- Compute more than one CP.

- Do not select the optimal heaviest tip, but use a heuristic.

- Select a pair of tips for the MS.

About heaviest branch selection, I think attempting to look for the heaviest branch is overrated. This idea assumed that different users will more or less have similar hash power but we know that in reality this is not the case.

Now that “white-flag” solution is on the table, I am thinking we should go about even easier solutions. While speaking to @darcy.camargo, he claimed that with “white-flag” URTS can be fine. Not sure that I fully agreed/understood but currently I am in the opinion that we should do the following:

When looking for transactions to approve without promotion: (almost) URTS on a subset. This may mimick some LM properties I think. It will help the tangle grow in a steady direction if I understood correctly. Since we’re using “white-flag” the drawbacks of it are minimal.

When doing promotions (for coordinator checkpoints we need this):

Perform at least one LM weighted walk to get a tip. For the other tip you can do maybe URTS, or almost URTS to approve left behinds.

I just realized that I completely ignored the attack vector of trying to approve a huge subtangle. Trying to validate too many transaction can be considered a DOS attack.

So for coordinator I think we should two walks, one with high alpha (or beta  ) and one with low alpha/beta (or maybe even 0).

) and one with low alpha/beta (or maybe even 0).

Then we can “time-out” on big sub-tangles.

The motivation behind “heaviest branch” selection for milestones is that it by definition maximizes CTPS (which is currently the key metric used to measure the performance of the Tangle). Also it is a very simple algorithm as there are no parameters that need to be tweaked and there is just one single criterion for a good milestone candidate. Further, I don’t see any major complexity/performance issues with it, since it seems to be easier to compute than the cumulative weight and with a bit more elaborate algorithm it can also be updated on-the-fly for incoming transactions.

Because all of this, I see it as a good first candidate.

URTS might be sufficient for the tip selection of regular nodes, but I don’t think it is a good idea for the milestone selection of the coordinator as the variance of the quality of selected milestones (especially in the presence of blowballs) is too large.

It might very well be that almost URTS also performs really good in practice for the milestone selection, but it seems to me to be less resilient against special kind of attacks. Eventually, we would need to test/simulate it to figure out and see how everything performs.

Also note, that the situation is different when comparing the tip selection of regular nodes and the milestone selection of the coordinator. (Almost) URTS seems to be better suited for regular nodes.

1 Like

I don’t fully understand that. If we are going the almost URTS route, why not restrict the tips to select from to the future cone of the last milestone/checkpoint?

Doing a weighted random walk here seems to definitely be a lot of computational work…

With the “conflict white flag” transactions don’t need be validated (with respect to their balance) at all, or they can be validated with one simple check that is only based on the last MS balance and not on their individual past cone. Therefore the impact of “huge subtangles” is drastically reduced.

So let’s assume that all the incoming transactions can be validated by all reasonable powerful nodes, why would you not include as many of them as possible in the next milestone?

Of course, if the “exploration” of such a huge subtangle renders the milestone-selection algorithm computational infeasible (as it is currently the case) this would be an option, but to prevent exactly that we thought about the introduction of checkpoints and the threshold value as described in the OP.

If there would be more transactions arriving than the nodes in the network can validate, it is a different problem.

The motivation behind “heaviest branch” selection for milestones is that it by definition maximizes CTPS (which is currently the key metric used to measure the performance of the Tangle). Also it is a very simple algorithm as there are no parameters that need to be tweaked and there is just one single criterion for a good milestone candidate. Further, I don’t see any major complexity/performance issues with it, since it seems to be easier to compute than the cumulative weight and with a bit more elaborate algorithm it can also be updated on-the-fly for incoming transactions.

Because all of this, I see it as a good first candidate.

True, if you want to approve as many transactions as you can and consider the current CTPS as the metric that defines the health of the tangle… then I can’t really argue. However, I want to challenge the idea that this is what we want. In current reality we know one can generate a huge subtangle full of spam that will approve little to no honest transactions. If we keep on using CTPS as a metric we will consider the tangle as healthy while in reality almost no user will be happy with the tangle.

If you agree with the above, you can read on. If not you can stop right here.

So the question is how to mitigate an attack on honest tx confirm rate, given the assumptions that:

- In the end we have finite resources.

- It is hard or nearly impossible to distinguish between honest and spam txs.

Due to (1) I am not convinced that saying “approving everything” will solve our problems. This will work when we don’t have high TPS. If we aim for TPS that is measured in the thousands, I am not sure…

If we accept (2) then we are in a problem… I have some half-baked ideas about what maybe we can do.

Still the structure of the tangle comes into play. I think the ideas of using local modifiers could be the way to go but I need to have better explanations before I continue pushing for this.

Yes you can use a flag actually, you are correct

That’s my point. I was trying to look for failure modes and how we protect against them. Signatures will always take time to validate. I guess if we move from WOTS it will be better but the problem will still exist. With enough TPS it will happen in real life for sure.

But this is then a different question more in the realm of rate control. It is not the job of the coordinator to control that, especially since in this scenario the gossiping and other network parts are not working reliably anymore. Different mechanisms are need than just confirming transaction that the coordinator saw earlier to provide a fair solution here.

Besides, in the “heaviest branch” approach it is especially easy to cap the number of confirmed transactions per milestone if that is desirable.

We still need to decide what happens in this scenario I believe.

Currently in mainnet if we have more txs then we can validate we simply orphan them to not get DOSed.

I think we should have something similar.

Orphaned transactions are still initially gossiped, require some form of integrity checks from the receiving nodes and still clog the network. Therefore, this is no proper DOS or spam protection at all.

You are talking about a very specific edge case, where:

- the network can still process and gossip the transaction reliably

- the coordinator - running on a rather powerful server - is able to validate more transactions than the rest of the network

- the issued milestones confirm more transactions than the network can validate

Reducing the number of transactions confirmed by a milestones would help here, but it would be a very unfair criterion as those transactions are selected more or less randomly (the ones that happen to be closer to the last MS). Even increasing the PoW difficulty would be fairer here in my opinion.

But sure, this is another pro for the heaviest branch selection as it is trivial to add a limit on the number of confirmed transactions. I’ll add it to the proposal above

I think this should be counted as another attack vector we take into account

So we have:

- Parasite Chain

- Blowball

- Splitting

- DOS attack

Of course with more efficient tip-sel and signature verification, we need to measure what are the actual resources needed to perform a DOS to understand how dangerous this is…

Another thing

We should probably start adding a hash on the milestone that will be of either one of the following options:

- the state diff

- the new ledger state

- of all the txs we confirmed