Just to throw in some alternative ideas from my own work on tangle sharding.

I make some different assumptions about parts that have already been researched more thoroughly, but here it is:

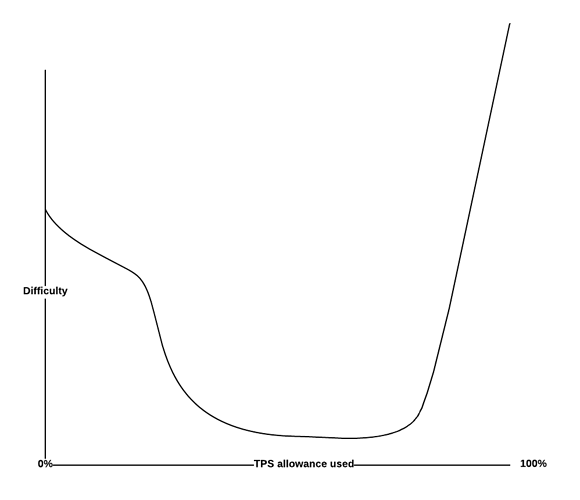

A nodeID represents a fixed unit of processing power: say 100 MHz of CPU, 200 MB of memory, a 0.2 Mbit connection and 500 MB of storage. This unit corresponds to N TPS of observing power and allows the nodeID to issue X TPS, where N is the local TPS of the tangle shard/slice it observes. Very dense areas need to ‘fight’ for TPS (= mana) but can have an unlimited number of observers.

Rate limiting is done with dynamic PoW: the first 20% of a nodeID's capacity has a high difficulty, the difficulty gradually decreases from 20% to 80%, and the last 20% becomes exponentially more difficult. This makes it most efficient to use a nodeID to its fullest and makes running many Sybil nodeIDs very costly.

An alternative is that nodes must issue transactions that (indirectly) reference their own previous transaction, creating a chain of transactions. The path can be described very efficiently with a Tangle Pathway-like encoding (or even just 0 = branch, 1 = trunk). Doing multiple transactions in parallel therefore requires multiple node identities. Instead of fighting Sybil identities, this approach exploits them.
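The dynamic PoW curve described above can be sketched as a piecewise function of a nodeID's utilization. This is a minimal sketch under stated assumptions: the post gives only the 20% / 80% breakpoints, so `D_HIGH`, `D_LOW` and `GROWTH` are hypothetical placeholder values in abstract cost units, and "capacity" is read as the fraction of the nodeID's X TPS already used in the current window.

```python
import math

# Hypothetical parameters: the post only fixes the 20% and 80% breakpoints.
D_HIGH = 20.0  # difficulty while a nodeID uses its first 20% of capacity
D_LOW = 10.0   # difficulty reached at 80% utilization (cheapest point)
GROWTH = 3.0   # controls how fast the last 20% becomes expensive


def pow_difficulty(utilization: float) -> float:
    """Return the PoW difficulty for the next transaction of a nodeID.

    `utilization` is the fraction (0.0 to 1.0) of the nodeID's capacity
    already used in the current window (an assumption about what
    "space" means here).
    """
    if not 0.0 <= utilization <= 1.0:
        raise ValueError("utilization must be in [0, 1]")
    if utilization < 0.2:
        # First 20%: flat, high difficulty.
        return D_HIGH
    if utilization < 0.8:
        # 20% to 80%: linear decrease from D_HIGH down to D_LOW.
        t = (utilization - 0.2) / 0.6
        return D_HIGH - t * (D_HIGH - D_LOW)
    # Last 20%: exponential growth starting from D_LOW.
    t = (utilization - 0.8) / 0.2
    return D_LOW * math.exp(GROWTH * t)
```

With these placeholder numbers the cheapest transactions are the ones issued at 80% utilization, so an operator is pushed to run each nodeID close to its capacity, while every extra, barely used Sybil nodeID pays the high first-20% price. The linear middle segment is just one choice; any decreasing curve gives the same incentive.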
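The simple "0 = branch, 1 = trunk" path encoding mentioned above can be packed into a single integer. This is a minimal sketch, not the Tangle Pathway format itself: it assumes each step is one bit and uses a leading sentinel 1 bit so that paths starting with branch steps keep their length. The dictionary-based tangle and the names `encode_path`, `decode_path` and `follow_path` are hypothetical stand-ins for illustration.

```python
from typing import Dict, List, Tuple

BRANCH, TRUNK = 0, 1  # 0 = branch, 1 = trunk, as suggested in the post


def encode_path(steps: List[int]) -> int:
    """Pack a sequence of branch/trunk steps into one integer.

    A leading 1 bit acts as a sentinel so leading BRANCH (0) steps survive.
    """
    value = 1
    for step in steps:
        if step not in (BRANCH, TRUNK):
            raise ValueError("step must be BRANCH (0) or TRUNK (1)")
        value = (value << 1) | step
    return value


def decode_path(value: int) -> List[int]:
    """Inverse of encode_path."""
    if value < 1:
        raise ValueError("encoded path must be >= 1")
    bits = bin(value)[3:]  # strip the '0b' prefix and the sentinel bit
    return [int(b) for b in bits]


def follow_path(tangle: Dict[str, Tuple[str, str]], start: str, steps: List[int]) -> str:
    """Walk from `start` through approved transactions.

    `tangle` maps a transaction ID to its (branch, trunk) parents; this toy
    structure is a hypothetical stand-in for a real tangle store.
    """
    current = start
    for step in steps:
        branch, trunk = tangle[current]
        current = trunk if step == TRUNK else branch
    return current
```

A verifier could decode the path attached to a node's new transaction, follow it, and check that it ends at that node's previous transaction. With this encoding a 20-step path needs 21 bits, i.e. 3 bytes (`(value.bit_length() + 7) // 8`).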

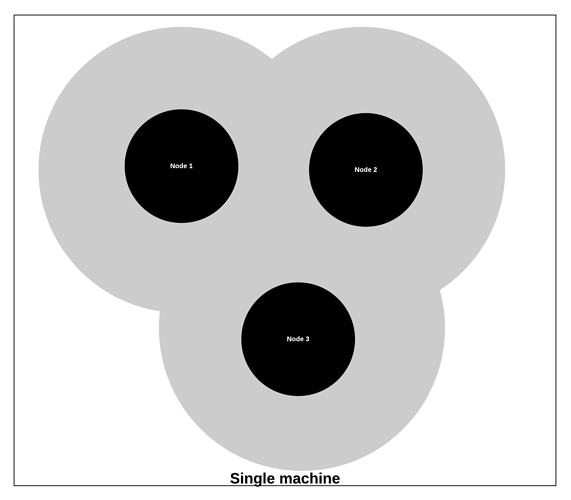

A single machine thus hosts many nodeIDs. Because they are lightweight, a single machine can ‘pixel’ paint a larger area for more processing, but it can also create more exotic shapes, which can be very useful for fund balancing on railroads, for example.
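How many nodeIDs a single machine can host follows directly from the unit definition above. A minimal sketch, assuming a machine is limited by its scarcest resource: the unit figures are the example values from the post (with 0.2 Mbit written as 200 kbit to keep the arithmetic in integers), while `Resources`, `max_node_ids` and the example machine are hypothetical.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class Resources:
    cpu_mhz: int
    memory_mb: int
    bandwidth_kbit: int
    storage_mb: int


# One nodeID unit, using the example figures from the post
# (0.2 Mbit expressed as 200 kbit).
NODE_UNIT = Resources(cpu_mhz=100, memory_mb=200, bandwidth_kbit=200, storage_mb=500)


def max_node_ids(machine: Resources, unit: Resources = NODE_UNIT) -> int:
    """Number of nodeIDs a machine can host, bounded by its scarcest resource."""
    return min(
        machine.cpu_mhz // unit.cpu_mhz,
        machine.memory_mb // unit.memory_mb,
        machine.bandwidth_kbit // unit.bandwidth_kbit,
        machine.storage_mb // unit.storage_mb,
    )
```

For a hypothetical machine with 10,000 MHz of CPU, 16,000 MB of memory, 100 Mbit of bandwidth and 1 TB of storage, memory is the bottleneck: 16,000 / 200 = 80 nodeIDs, which the machine can then spread over 80 ‘pixels’ of the shard space.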

The problem of eclipse-attacked minting is also mentioned in the interview with NEAR Protocol and can be solved with deterministic watchtowers, i.e. `Hash(NodeID) % shardspace` determines the location a node watches/observes. A watchtower is not allowed to issue transactions but can detect mistakes, and detected minting attempts can be broadcast through the network. (So this only prevents minting, not double spends.) What you end up with is a localized structure for issuing transactions and a global structure for watching the total supply. If two areas only watched each other, that would be easily detected (the nodeIDs would not be randomly distributed) and other nodes could start watching.
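The deterministic watchtower assignment `Hash(NodeID) % shardspace` can be sketched as follows. This is a minimal sketch, assuming SHA-256 as the hash (the post does not name one) and treating the shard space as a flat range of integer locations. `unwatched_shards` lists locations nobody watches, and `chi_square` is one possible (hypothetical) way to flag the non-random distribution the post mentions: a large Pearson statistic relative to the chi-square distribution with `len(counts) - 1` degrees of freedom suggests nodeIDs are not spread uniformly.

```python
import hashlib
from collections import Counter
from typing import Iterable, List


def watch_location(node_id: str, shard_space: int) -> int:
    """Deterministic watchtower slot: Hash(NodeID) % shardspace (SHA-256 assumed)."""
    digest = hashlib.sha256(node_id.encode("utf-8")).digest()
    return int.from_bytes(digest, "big") % shard_space


def watcher_counts(node_ids: Iterable[str], shard_space: int) -> List[int]:
    """Number of watchtowers assigned to each shard location."""
    counts = Counter(watch_location(n, shard_space) for n in node_ids)
    return [counts[s] for s in range(shard_space)]


def unwatched_shards(node_ids: Iterable[str], shard_space: int, min_watchers: int = 1) -> List[int]:
    """Shard locations observed by fewer than `min_watchers` nodes."""
    counts = watcher_counts(node_ids, shard_space)
    return [s for s, c in enumerate(counts) if c < min_watchers]


def chi_square(counts: List[int]) -> float:
    """Pearson chi-square statistic of watcher counts against a uniform spread."""
    total = sum(counts)
    if not counts or total == 0:
        raise ValueError("need at least one watcher")
    expected = total / len(counts)
    return sum((c - expected) ** 2 / expected for c in counts)
```

Because the location depends only on the nodeID, anyone can recompute who should be watching which area, so a cluster of nodeIDs that only watch each other stands out as a skewed count rather than having to be discovered by probing.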
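The post leaves open exactly what a watchtower checks. A minimal sketch, assuming a simple value-transfer model in which each hypothetical `Transfer` lists the amounts it consumes and creates: the watchtower flags any transaction that creates more value than it consumes, and also flags negative amounts, since those could hide extra value. As in the post, this deliberately ignores double spends (whether the inputs were already spent elsewhere).

```python
from dataclasses import dataclass
from typing import Dict, List


@dataclass
class Transfer:
    inputs: Dict[str, int]   # address -> amount consumed (hypothetical model)
    outputs: Dict[str, int]  # address -> amount created


def is_minting(tx: Transfer) -> bool:
    """True if the transaction creates value out of nothing."""
    amounts = list(tx.inputs.values()) + list(tx.outputs.values())
    if any(a < 0 for a in amounts):
        # Negative amounts could offset an inflated output, so flag them too.
        return True
    return sum(tx.outputs.values()) > sum(tx.inputs.values())


def detect_minting(txs: List[Transfer]) -> List[int]:
    """Indices of transactions in the watched area that should be broadcast as minting attempts."""
    return [i for i, tx in enumerate(txs) if is_minting(tx)]
```

Since the check only needs the transactions of the watched area and no issuing rights, it fits the role described above: a watchtower observes, and when `detect_minting` returns a non-empty list it raises the alarm to the rest of the network.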

Unfortunately, because a node is a small unit of processing, you will quickly have to shard the tangle even if a standard machine could easily handle that amount of TPS. I am not sure if and how this could fit into current research, but I think it doesn’t touch too much on what a node ‘is’ in the current setting while providing a mechanism to deal with some of the issues of sharding.

Together with the following article, I think this is a good base for sharding in general. (These are not the only concepts I worked on, but this post is about defining a node as a fixed set of computation.)