TL;DR: The IOTA Congestion Control Algorithm (ICCA) provides fairness, short message delays and high utilization. Attackers, while issuing message above their allowed rate, may slow down the propagation time of messages from honest users and increase the number of solidification requests. In this document, we propose a drop-head policy for the queues of ICCA where old messages are booked, but not gossiped or added to the tip set. We claim that this solution avoids the shortcomings of the blacklisting approach.

PS: If you are already familiar with the ICCA protocol, read directly section “Drop-head queues”.

Preliminaries

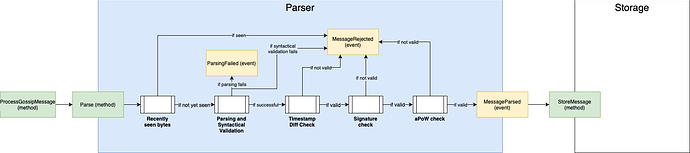

Data Flow

In the IOTA protocol (in this post, with IOTA protocol we refer to IOTA 2.0), messages are exchanged through a gossip protocol, where nodes are connected to each other in a peer-to-peer fashion. Received messages must undergo semantic and syntactic validation, timestamp and signature checks, and the adaptive PoW filter (which may be omitted in the future). Solid messages (which have correctly passed the above checks) go to the booker and, at the same time, are added to the outbox buffer. Discussions concerning the solidifier and the booker are beyond the scope of this post.

IOTA Congestion Control Algorithm

The IOTA Congestion Control Algorithm (ICCA) is a set of rules used to enforce short delays, high utilization of the network and fair rate with respect to access Mana. In particular, ICCA is formed by three main components:

- outbox buffer: this buffer gathers valid, solid messages, which are sorted by timestamp, and is split into several queues depending on the node creating the message.

- scheduler: an algorithm inspired by the Weighted Deficit Round Robin is used to schedule messages from the outbox buffer; specifically, each queue has a value, called deficit, which is incremented every round (queues are visited in a round-robin fashion); once the queue has enough deficit, the waiting messages can be scheduled;

- rate setter: this component, based on the AIMD algorithm, sets the throughput of a node according to the current traffic conditions and to its access Mana;

Once the message is scheduled, it is then added to the tip set and gossiped to the neighbors. Currently, the tip selection algorithm is the Restricted Uniform Random Tip Selection. More information about ICCA can be found in [Cullen, 2020].

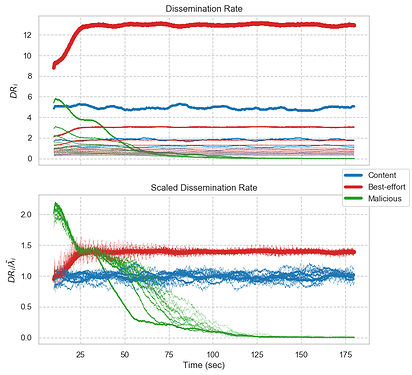

How ICCA deals with attackers

Attack vectors

As proved in [Cullen, 2020], attackers are not able to increase their throuhgput by issuing more messages than the rate setter allows. In fact, when an attacker tries to bypass the rate setter, the exceeding messages will remain backlogged onto the outbox buffers of honest nodes which have not accumulated enough deficit for that specific queue.

While fairness and delay of honest nodes is not affected, such larger delays for the attacker’s messages may affect consistency. In fact, attacker’s neighbors receive and schedule such messages much earlier then nodes many steps away from the attacker. This can potentially affect consistency as honest nodes’ messages may approve attacker’s messages (since these, once scheduled, are added to the tip set). Once honest nodes’ messages are propagated throughout the network, much faster than attacker’s messages, many nodes will see these messages as not solid, since the approved (malicious) messages have not been received yet. Attackers can, hence, inflate the number of solidification requests, lowering the overall network performance.

Blacklisting

In [Zhao, 2021], we proposed a way to limit the effectiveness of the above attack. The idea is to label as “malicious” nodes whose queue lengths are above a certain threshold. To be fair, this threshold is weighted according to the access Mana of the node. When nodes get the label “malicious”, they are considered as blacklisted. If a node is blacklisted, its messages will be dropped on arrival for a given amount of time. Please note that blacklisting is local, which means that the attacker may be blacklisted by only a subset of nodes. In general, this is not a problem as attacker’s neighbors will react fast and blacklist in short time, de facto eclipsing the malicious node. There are two main problems with this approach:

- attacker can find new peers and restart the attack; additional solidification requests will be required to keep the consistency of the network;

- it is critical to correctly define the blacklisting threshold to avoid blacklisting honest nodes; in such an unlucky scenario, honest nodes would experience bad user experience.

Drop-head queues

Rationale

Each node adds messages in the outbox buffer depending on the throughput allowed by the rate setter. We highlight that nodes have to add these messages onto the outbox buffer and are subject to the deficit round robin scheduler. Such a scheduler provides a filter for messages at the source: this means that, when messages arrives at other nodes’ outbox buffers, these nodes will have already built enough deficit to schedule such messages; hence, messages should not be backlogged. With this observation, we experimentally noticed that the outbox buffers contain a very low number of messages on average. Some messages may temporarily accumulate due to the randomness of the communication channels. Otherwise, we should see a relevant number of messages in the outbox buffers only in two scenarios:

- out-of-sync nodes: when nodes are out-of-sync, they will be receiving old (and new) messages, which can fulfill the outbox buffer;

- malicious nodes: as we have seen in the previous section, messages from malicious nodes get backlogged at honest nodes’ outbox buffers.

Proposal

We first set queue sizes proportionally to the access Mana held by the corresponding nodes. Then, when a queue gets full, we use a drop-head policy to discard the first message in the queue (which corresponds to the oldest one).

Remember that messages are boooked before being added to the outbox buffer, and scheduled messages are only gossiped to neighbors and added to the tip set. We claim that the drop-head policy successfully deals with the two scenarios previously considered:

- in case of an out-of-sync node, we are discarding old messages: these messages are likely to have already been received by neighbors; hence, it is possible to avoid to forward them to neighbors; additionally, it is not convenient to validate old messages (as already validated with high probability), hence they should not be added to the tip set;

- in case of malicious nodes, then the discarded messages are the attackers’ ones, hence dropping them is exactly what we want!

Tip selection algorithm

Due to the asynchronous nature of DLTs, some messages may still be scheduled by some nodes and not by others. In case of old messages, it is not an issue as these old messages will simply be dropped on arrival by neighbors since they are duplicates. In case of attacker’s messages, the situation is more complicated. In fact, dropping messages in some nodes may lead to inconsistencies. To limit the probability this event would occur, we propose to modify the tip selection algorithm as follows.

The probability to select a message as a tip is:

- inversely proportional to number of messages (weighted by access Mana) the corresponding issuing node has in the scheduler

- directly proportional to the time the message has been in the tip pool

The first condition allows not to select messages from an attacker inflating its queue; hence, the inconsistencies in the perception of the ledger across different nodes, although existing, should not be relevant as attacker’s messages will not be confirmed. The second condition allows that a burst of messages from an honest node is not perceived as attacker’s messages: in fact, the idea is to wait some (small) time to see if additional messages from that node are arriving, or if the burst was provoked by randomness.

Conlusions

Pros

Our new proposal allows to deal smoothly with resynchronization and to avoid false positive blacklisting. Furthermore, it is possible to tie the queue length per node to the current traffic volume: in such a case, the queue length would provide “for free” an estimation of the minimum mana required to issue messages: less traffic, larger allowed queues, lower amount of mana required to access the network.

Challenges

How can we correctly set the queue lenghts? This is the task we are currently working on. We are building a simple simulator to evaluate the statistics (e.g., average, maximum, standard deviation) of the queues in order to find a good estimation of their lengths. The implementation of such a proposal in GoShimmer is also starting soon according to the insights taken from the simulations.